AI sign-language recognition has advanced considerably with computer vision, enabling real-time translation of common signs and accurate capture of basic gestures. However, challenges remain in recognizing subtle nuances, regional dialects, and cultural variations. Gesture variability, ambiguous signs, and emotional cues make seamless, universal understanding hard to achieve. Developers are working on solutions such as diverse datasets and multimodal systems to improve reliability. The sections below look at how these innovations are shaping the future of accessible communication.

Key Takeaways

- AI can recognize basic signs and support real-time translation but struggles with gesture variability and cultural differences.

- Recognizing subtle nuances, emotional context, and regional dialects remains a significant challenge for current systems.

- Diverse and extensive datasets, including multimodal and contextual data, are essential to improve accuracy and adaptability.

- Cross-language and cross-cultural sign recognition require advanced models trained on multilingual, region-specific data.

- Ongoing innovations aim to enhance robustness, personalization, and real-world usability, despite existing technical and dataset limitations.

How AI Recognizes Sign Language Using Computer Vision

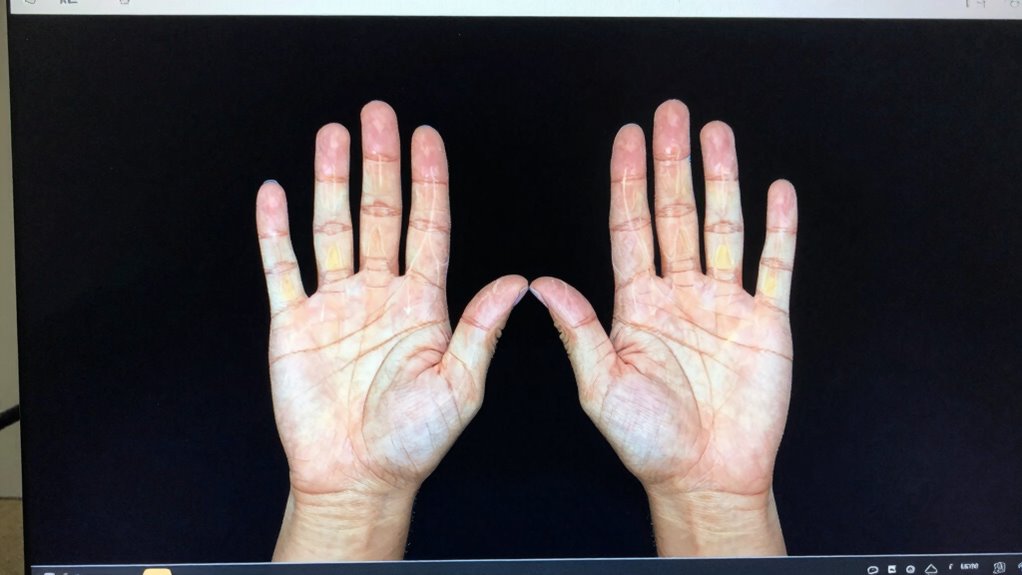

AI recognizes sign language through computer vision by analyzing video to identify hand shapes, movements, and facial expressions. It relies on gesture segmentation, which isolates individual signs within continuous signing so the system can tell where one sign ends and the next begins. Dataset diversity plays an equally vital role: varied data from different signers, environments, and lighting conditions helps the model generalize across users, makes it more robust and less biased, and exposes it to regional dialects and cultural variations. Dataset augmentation can further improve recognition by simulating additional conditions and variations. Together, gesture segmentation and high-quality, diverse training data make recognition more precise and adaptable, and ongoing research on bias reduction aims to ensure fair recognition across diverse populations.
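To make gesture segmentation concrete, here is a minimal sketch of one simple way it could work. It assumes a hand tracker has already produced one (x, y) position per video frame, and it treats pauses in movement as sign boundaries. The function names, the threshold, and the minimum length are illustrative assumptions, not part of any particular system; production systems typically learn boundaries rather than thresholding speed.

```python
from math import hypot


def motion_energy(track):
    """Per-frame speed of one tracked point: distance moved since the previous frame."""
    return [0.0] + [
        hypot(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(track, track[1:])
    ]


def segment_signs(track, threshold=0.05, min_len=3):
    """Return (start, end) frame ranges (end exclusive) where motion exceeds threshold.

    threshold and min_len are hypothetical tuning values for this sketch.
    """
    energy = motion_energy(track)
    segments, start = [], None
    for i, e in enumerate(energy):
        if e > threshold and start is None:
            start = i  # movement begins: open a candidate segment
        elif e <= threshold and start is not None:
            if i - start >= min_len:  # ignore very short twitches
                segments.append((start, i))
            start = None
    if start is not None and len(energy) - start >= min_len:
        segments.append((start, len(energy)))  # movement ran to the last frame
    return segments


# Toy track: pause, horizontal movement, pause, vertical movement, pause.
track = (
    [(0.0, 0.0)] * 3
    + [(0.1 * k, 0.0) for k in range(1, 5)]
    + [(0.4, 0.0)] * 3
    + [(0.4, 0.1 * k) for k in range(1, 5)]
    + [(0.4, 0.4)] * 2
)
print(segment_signs(track))
```

In this toy track the two bursts of movement come out as two separate segments, which a downstream classifier could then label one at a time.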

What Are the Current Capabilities of AI Sign-Language Translation Systems?

Have you ever wondered how close we are to real-time sign-language translation? Current AI systems can now recognize basic signs with impressive gesture accuracy, enabling faster communication. These systems are improving in adapting to different users, learning individual signing styles for better accuracy over time. You’ll find that:

- Gesture accuracy varies depending on the complexity of signs

- Many systems support real-time translation for common phrases

- User adaptation helps tailor recognition to individual signing styles

- Continuous updates enhance system flexibility and reliability

- Advances in European cloud infrastructure are supporting scalable and secure deployment of these AI translation systems

While these advances are promising, some challenges remain in handling diverse sign languages and subtle nuances. Still, the progress in gesture accuracy and user adaptation means AI is becoming increasingly capable of bridging communication gaps for sign-language users.

Challenges in Capturing Nuances and Variations in Sign Language Recognition

Capturing the subtleties and variations in sign language remains a significant hurdle for recognition systems. Gesture variability makes it difficult because individuals perform signs differently based on personal style, speed, and context. These differences complicate AI models that rely on consistent patterns. Cultural differences further challenge recognition, as signs can vary widely across regions and communities, altering hand shapes, movements, and facial expressions. A system trained on one dialect or regional style may therefore struggle to interpret signs from another. To overcome this, AI must learn to adapt to diverse gestures and cultural nuances, which requires extensive, inclusive data. Position-invariant techniques could also help models interpret signs without strict positional constraints. Without addressing these factors, sign language recognition systems risk misinterpretation and reduced accuracy, limiting their effectiveness across different user groups. Adaptive learning methods can enable systems to improve over time as they encounter new sign variations.
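One common way to loosen positional constraints is to normalize hand landmarks before classification, so that the same hand shape looks identical wherever it appears in the frame and however large the hand is. The sketch below assumes landmarks arrive as a list of (x, y) points with the wrist at index 0; the reference index and function name are hypothetical choices for illustration.

```python
from math import hypot


def normalize_hand(landmarks, wrist=0, ref=1):
    """Translate so the wrist is the origin and scale so wrist-to-ref distance is 1.

    The wrist/ref indices are assumptions about the landmark layout.
    """
    wx, wy = landmarks[wrist]
    rx, ry = landmarks[ref]
    scale = hypot(rx - wx, ry - wy)
    if scale == 0:
        raise ValueError("wrist and reference landmark coincide")
    return [((x - wx) / scale, (y - wy) / scale) for x, y in landmarks]


# The same hand shape, seen close to the camera and far away in another corner.
near = [(2.0, 2.0), (2.0, 4.0), (3.0, 2.0)]
far = [(10.0, 10.0), (10.0, 14.0), (12.0, 10.0)]
print(normalize_hand(near) == normalize_hand(far))
```

Normalization of this kind removes position and size differences only. It does not address differences in speed, style, or dialect, which still need diverse training data.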

Handling Ambiguous and Complex Gestures With AI

Handling ambiguous and complex gestures presents a unique challenge for sign language recognition systems. Gesture ambiguity often leads AI to misinterpret signs, especially when gestures resemble others or are performed hurriedly. Emotional context adds another layer of complexity, as facial expressions and body language influence meaning. To address these issues, AI systems:

- Use advanced algorithms to distinguish subtle differences in gesture execution

- Incorporate emotional cues to interpret signs more accurately

- Leverage multi-modal data, combining hand movements with facial expressions

- Improve training datasets with diverse, real-world examples of ambiguous gestures

- Consider sensor placement to ensure reliable system performance in various environments

- Emphasize training data diversity to improve the AI’s understanding of real-world variability and its accuracy in complex scenarios

Ongoing research also focuses on optimizing sensor placement to reduce errors caused by environmental factors. Robust sensor calibration is equally important for maintaining detection accuracy and reliability across different settings.
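The idea of combining hand movements with facial expressions can be sketched as late fusion: two separate models each output class probabilities, and their scores are merged. The example below is an illustration under stated assumptions, not a description of any specific product. The labels, the weight, and the function name are hypothetical, and real systems often fuse features inside a single network instead.

```python
from math import exp, log


def fuse_scores(hand_probs, face_probs, hand_weight=0.7):
    """Weighted log-linear fusion of two per-class probability dicts, renormalized.

    hand_weight is a hypothetical tuning value; 1.0 would ignore the face model.
    """
    eps = 1e-9  # avoids log(0) when a model assigns zero probability
    fused = {}
    for label, p_hand in hand_probs.items():
        p_face = face_probs.get(label, 0.0)
        fused[label] = exp(
            hand_weight * log(p_hand + eps)
            + (1.0 - hand_weight) * log(p_face + eps)
        )
    total = sum(fused.values())
    return {label: score / total for label, score in fused.items()}


# The hand model cannot decide, but the face model favors one reading.
hand = {"statement": 0.5, "question": 0.5}
face = {"statement": 0.2, "question": 0.8}
fused = fuse_scores(hand, face)
print(max(fused, key=fused.get))
```

When the manual channel is ambiguous, the facial channel breaks the tie. When the hand model is confident, the weight keeps a noisy face score from overriding it too easily.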

How AI Recognizes Different Sign Languages Across Languages

AI recognizes different sign languages across languages by analyzing unique gestures, facial expressions, and contextual cues specific to each system. Multilingual models help achieve this by training on diverse datasets, allowing the AI to distinguish subtle variations influenced by cultural differences. These models adapt to the nuances that vary between regions, such as hand shapes, movement patterns, or facial expressions. To illustrate, consider the following differences:

| Sign Language | Cultural Influence | Key Feature |
|---|---|---|
| ASL | Western culture | Facial expressions emphasize emotions |
| BSL | British culture | Specific hand configurations |
| LSF | French culture | Unique movement sequences |

This approach enables AI to recognize multiple sign languages, but cultural differences still pose challenges for universal understanding. Recognizing regional variations is essential for creating more accurate and inclusive sign language recognition systems. Additionally, ongoing research aims to address cultural nuances to improve system robustness across diverse populations.

Improving Accuracy and Context in Sign-Language Recognition

Enhancing accuracy and contextual understanding in sign-language recognition requires sophisticated algorithms that analyze not just isolated gestures but also the surrounding environment and conversational cues. By addressing gesture complexity and capturing emotional context, AI systems become more reliable. To improve, focus on:

- Integrating multi-modal data like facial expressions and body language

- Developing models that interpret emotional cues alongside gestures

- Using contextual information to disambiguate similar signs

- Training on diverse datasets to handle gesture variations and nuances

- Applying attention to detail in testing so the system accurately captures subtle differences in signs and expressions

Testing in real-world settings can also provide insights into everyday variability, further enhancing robustness. These strategies help AI better understand the full meaning behind signs, especially in natural conversations. Recognizing emotional context adds depth, making interactions more genuine. Cross-disciplinary approaches can accelerate progress by integrating insights from linguistics, psychology, and computer science. Overall, refining these aspects pushes sign-language recognition closer to practical, real-world use.
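Using context to disambiguate similar signs can be illustrated with a small rescoring step: the visual model’s probabilities are multiplied by how likely each sign is to follow the previous one, then renormalized. This is a minimal sketch under assumed inputs. The glosses, the bigram table, and the floor value are made up for illustration, and a real system would learn such statistics from annotated signing.

```python
def rescore_with_context(visual_probs, prev_sign, bigram, floor=1e-3):
    """Combine visual probabilities with P(sign | previous sign) and renormalize.

    bigram maps (previous, current) pairs to probabilities; unseen pairs
    fall back to a small floor so they are penalized but not eliminated.
    """
    scores = {
        sign: p * bigram.get((prev_sign, sign), floor)
        for sign, p in visual_probs.items()
    }
    total = sum(scores.values())
    return {sign: score / total for sign, score in scores.items()}


# Two visually similar hypothetical signs; the visual model slightly prefers SIGN_A.
visual = {"SIGN_A": 0.55, "SIGN_B": 0.45}
# Hypothetical context statistics: SIGN_B follows SIGN_X far more often.
bigram = {("SIGN_X", "SIGN_A"): 0.05, ("SIGN_X", "SIGN_B"): 0.60}

rescored = rescore_with_context(visual, "SIGN_X", bigram)
print(max(rescored, key=rescored.get))
```

Here the context reverses a narrow visual preference. The floor matters in practice: without it, any sign pair missing from the table could never be recognized.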

Limitations of AI Sign-Language Tools in Real-World Use

Despite significant progress, AI sign-language tools still face notable challenges in real-world settings. Gesture variability makes it difficult for algorithms to recognize signs consistently, especially when users have different signing styles or hand movements. Cultural differences add complexity, as signs vary widely across communities and regions in ways that AI models may not fully capture. These limitations reduce accuracy and usability outside controlled environments. Visual variability, such as changes in lighting and surroundings, remains a core hurdle as well. Recognizing cultural context, improving training data diversity, and handling visual variability with more advanced algorithms are all needed to build adaptable, inclusive systems that cope with the range of signing behaviors encountered globally.

Future Directions for More Robust Sign-Language Recognition

To develop more robust sign-language recognition systems, researchers are exploring advanced machine learning techniques, including deep learning models that can better handle gesture variability and cultural differences. By focusing on multimodal integration, these systems combine visual, contextual, and motion data for improved accuracy. Future efforts also emphasize cultural adaptation, enabling models to recognize regional signs and dialects and reducing misinterpretations across diverse sign languages. You can expect innovations like adaptive algorithms that learn from user interactions and contextual cues. Additionally, integrating sensors with visual data will enhance recognition in complex environments. These directions aim to make sign-language tools more reliable and inclusive, bridging gaps for users worldwide. The focus remains on creating adaptable, culturally sensitive systems that work seamlessly across different communities.

How Developers Are Overcoming AI Sign-Language Challenges

Developers are actively addressing the challenges in AI sign-language recognition by designing innovative solutions that improve system accuracy and reliability. They are expanding and diversifying gesture datasets to ensure models can recognize a wide range of signs across different users. By incorporating large, varied datasets, systems become more adaptable to individual signing styles and regional variations. User adaptation techniques also play a key role, allowing AI models to learn from specific users over time, enhancing personalized accuracy. Developers leverage transfer learning and continuous training to improve recognition performance, even with limited data. These strategies collectively help overcome variability in signs, hand shapes, and motion, making sign-language recognition more practical and accessible for diverse users.
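User adaptation can be sketched with a very small stand-in model: a nearest-prototype classifier whose per-sign prototypes shift toward examples a specific user has confirmed. This is a deliberately simple substitute for the transfer learning and continuous training mentioned above, which would fine-tune a neural network instead. The class name, feature vectors, and labels are hypothetical.

```python
class PrototypeClassifier:
    """Nearest-prototype classifier that adapts to one user's signing style."""

    def __init__(self, prototypes):
        # Copy the generic (pre-trained) prototypes so adaptation stays per-user.
        self.prototypes = {label: list(vec) for label, vec in prototypes.items()}
        self.counts = {label: 1 for label in prototypes}

    def predict(self, features):
        def sq_dist(vec):
            return sum((a - b) ** 2 for a, b in zip(features, vec))

        return min(self.prototypes, key=lambda label: sq_dist(self.prototypes[label]))

    def adapt(self, features, label):
        """Move the label's prototype toward a confirmed example (running mean)."""
        self.counts[label] += 1
        n = self.counts[label]
        self.prototypes[label] = [
            p + (f - p) / n for p, f in zip(self.prototypes[label], features)
        ]


model = PrototypeClassifier({"SIGN_A": [0.0, 0.0], "SIGN_B": [1.0, 1.0]})
user_sample = [0.6, 0.6]           # this user's SIGN_A sits closer to generic SIGN_B
print(model.predict(user_sample))  # misread before adaptation
model.adapt(user_sample, "SIGN_A") # the user confirms the intended sign
print(model.predict(user_sample))  # recognized after adaptation
```

The running mean means early corrections move a prototype a lot and later ones move it less, which keeps a single unusual sample from undoing what the system has already learned about the user.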

Frequently Asked Questions

How Do AI Systems Differentiate Similar Signs in Different Contexts?

You can improve AI systems’ ability to differentiate similar signs by focusing on contextual disambiguation and gesture variability. The AI analyzes surrounding words, facial expressions, and body language to understand the sign’s meaning in a specific situation. It also learns from diverse gesture variations, making it more accurate across different users and contexts. This way, the AI adapts to subtle differences and correctly interprets signs in real-world conversations.

Can AI Recognize Sign Language From Live Video Feeds Effectively?

Yes, AI can recognize sign language from live video feeds effectively, but its success depends on gesture accuracy and real-time processing. You’ll see improved results with advanced algorithms that interpret gestures quickly and precisely. While some challenges remain, especially with complex signs or poor lighting, current AI systems are increasingly capable of providing near-instantaneous recognition, making real-time communication more accessible for sign language users.

What Are the Ethical Considerations in AI Sign-Language Technology?

You need to consider privacy when developing AI sign-language tech, ensuring users’ data stays secure and confidential. Bias mitigation is also vital, as algorithms may unintentionally favor certain signers or dialects, leading to unfair results. Ethically, you should be transparent about how data is used and aim for inclusive design, so the technology respects all users’ identities and communication styles while safeguarding their rights.

How Do Cultural Differences Affect AI Sign-Language Recognition Accuracy?

Have you ever wondered how cultural differences impact AI sign-language recognition? They substantially affect accuracy because signs and their nuances differ across regions. AI systems trained on one community’s signs may struggle with another’s, leading to misinterpretations. To improve, developers must incorporate diverse datasets reflecting global sign-language variations, so the AI recognizes signs accurately regardless of cultural context. Wouldn’t you want inclusive technology that understands everyone’s signs?

Are There Open Datasets Available for Training Sign-Language AI Models?

You’ll find open datasets for training sign-language AI models, such as RWTH-PHOENIX-Weather 2014 for German Sign Language and ASL datasets like WLASL, which are available for research use. To improve your model, consider using data augmentation techniques to expand limited datasets and enhance accuracy. These datasets provide a solid foundation for training, but keep in mind that cultural differences may still impact recognition accuracy, so ongoing adjustments and diverse data are key to success.
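As a rough illustration of data augmentation for landmark-based models, the sketch below produces varied copies of one sample by mirroring, rescaling, and adding small jitter. It assumes landmarks are (x, y) pairs in normalized image coordinates between 0 and 1. The function name and parameter values are hypothetical, and mirroring is only appropriate where swapping the signing hand does not change a sign’s meaning in your data.

```python
import random


def augment(landmarks, rng, max_scale=0.1, max_jitter=0.01, mirror_prob=0.5):
    """Return a randomly perturbed copy of a list of (x, y) landmarks.

    Assumes coordinates are normalized to [0, 1]; all limits are illustrative.
    """
    scale = 1.0 + rng.uniform(-max_scale, max_scale)  # simulates camera distance
    mirror = rng.random() < mirror_prob               # simulates the other hand
    out = []
    for x, y in landmarks:
        if mirror:
            x = 1.0 - x  # flip horizontally within the normalized frame
        # Scaling is about the origin for simplicity; jitter imitates tracker noise.
        x = x * scale + rng.uniform(-max_jitter, max_jitter)
        y = y * scale + rng.uniform(-max_jitter, max_jitter)
        out.append((x, y))
    return out


sample = [(0.2, 0.3), (0.25, 0.4), (0.3, 0.35)]
rng = random.Random(42)  # fixed seed so augmented sets are reproducible
augmented = [augment(sample, rng) for _ in range(5)]
print(len(augmented), "variants of", len(sample), "landmarks each")
```

Passing in a seeded `random.Random` instance keeps experiments repeatable, which helps when comparing models trained with and without augmentation.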

Conclusion

Think of AI sign-language recognition as a brave explorer charting a vast, uncharted jungle. While it’s made exciting discoveries and mapped many paths, some trails remain hidden or tangled. With each challenge you face, you’re helping this explorer grow more skilled and confident. Together, you’re transforming an uncertain wilderness into a clear, accessible trail for everyone, opening a future where communication knows no barriers.