Robots that learn by watching humans rely on a simple yet powerful idea: they observe our actions, gestures, and cues to acquire new skills. This approach combines vision processing, imitation learning, and sensor integration to interpret behaviors without explicit, step-by-step programming, which makes interactions more intuitive and adaptable. As the field advances, further innovations are expected to improve both what robots understand from observation and how safely they apply it. Keep exploring to see how this method is shaping our robotic future.

Key Takeaways

- Robots learn new skills by observing and mimicking human actions through visual and sensory data analysis.

- Imitation learning enables robots to acquire behaviors without explicit programming, simplifying skill transfer.

- Incorporating human gestures, cues, and context allows robots to understand and replicate complex tasks naturally.

- Advanced perception and multimodal sensors help robots interpret dynamic, unstructured environments effectively.

- Ethical frameworks and human oversight help keep learning from human demonstrations responsible and safe.

How Do Robots Learn Skills by Watching Humans?

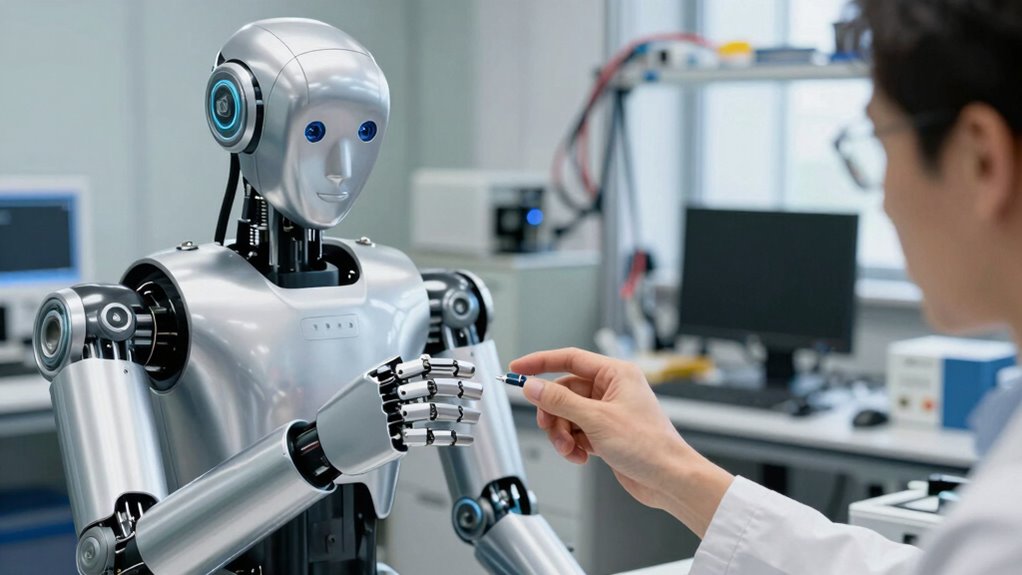

Robots learn skills by watching humans through a process called imitation learning. It is about more than copying movements: robots observe human actions closely, analyzing gestures, timing, and context so they can replicate behaviors accurately. Developers also consider robot empathy (how robots interpret human emotions and responses) to make interactions feel more natural. Some systems pair this with cloud infrastructure, for example European cloud solutions, to strengthen data security and energy efficiency and to support the real-time data processing that lets robots adapt quickly to new situations. Ongoing research into machine learning algorithms continues to improve how well robots understand and mimic human behavior.

Ethics is an essential part of this work. Ethical frameworks guide robot design so that robots act within moral boundaries, do not misuse the information they gather, and do not mimic harmful behaviors. If you design these systems, your role involves balancing technological capabilities with those boundaries so robots learn safely and responsibly. Robots can then adapt and improve, but always under human oversight to prevent unintended consequences, and that vigilance helps build trust in these learning machines.

The Tech That Helps Robots Learn From Demonstrations

Several technologies work together to let robots learn from demonstrations. Visual data processing allows robots to interpret human actions accurately, and imitation learning techniques then help them replicate those skills. Together, these advances are transforming real-world applications by making robots more adaptable and capable, and understanding them is essential for grasping how automation will shape our future interactions. Advances in machine perception also help robots analyze and respond to complex environments. As these systems become more sophisticated, they rely increasingly on sensor integration to gather diverse information from their surroundings.
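Sensor integration can be made concrete with a small example. The sketch below assumes two sensors (a camera and a depth sensor, both hypothetical here) each report an independent, unbiased estimate of the same hand position with a known variance, and it fuses them by inverse-variance weighting, one standard way to combine such readings. It is an illustration under those assumptions, not the method any particular robot uses; `Estimate` and `fuse` are names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # measured quantity, e.g. hand position along one axis (m)
    variance: float  # measurement variance (m^2); smaller means more trusted


def fuse(estimates: list[Estimate]) -> Estimate:
    """Combine independent, unbiased estimates by inverse-variance weighting."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    # The fused variance is never larger than the smallest input variance.
    return Estimate(value=value, variance=1.0 / total)


# Hypothetical readings of the same hand position from two sensors.
camera = Estimate(value=0.52, variance=0.04)
depth = Estimate(value=0.48, variance=0.01)
fused = fuse([camera, depth])
print(f"{fused.value:.3f} m, std {fused.variance ** 0.5:.3f} m")
```

With these made-up numbers the fused estimate is about 0.488 m, pulled toward the more precise depth reading, and its variance is smaller than either input's. Real systems usually fuse readings over time as well (for example with a Kalman filter), which this one-shot sketch leaves out.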

Visual Data Processing

Visual data processing serves as the foundation for robots to learn effectively from human demonstrations. It allows robots to interpret visual cues like gestures, which are vital for understanding human intent. Sensory integration combines visual input with other senses, helping robots create a thorough understanding of their environment. This process involves advanced algorithms that analyze movement patterns, recognize gestures, and track objects in real time. Here’s a quick overview:

| Feature | Function |
|---|---|
| Gesture recognition | Identifies hand signals and body language |
| Sensory integration | Combines visual cues with tactile and auditory data |
| Object tracking | Monitors movements of objects and humans |
| Pattern analysis | Recognizes familiar gestures and behaviors |

This technology lets robots observe, interpret, and respond more naturally, making their learning more effective. Free-floating designs, which do not rely on fixed support structures, can also help robots adapt to diverse environments. Advances in visual data processing have further improved robots' ability to learn in unstructured and dynamic settings, enhancing their adaptability.
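To make gesture recognition and pattern analysis a little more concrete, here is a minimal nearest-template classifier for 2-D hand trajectories. It assumes the hand has already been tracked into a list of (x, y) positions; it resamples each trajectory to a fixed number of points along its path, normalizes position and scale, and picks the stored template with the smallest mean point-to-point distance. The templates, labels, and function names are hypothetical, and this simplified technique ignores rotation and direction, so it is a sketch rather than how production gesture recognizers work.

```python
import numpy as np


def resample(points: np.ndarray, n: int = 32) -> np.ndarray:
    """Resample a 2-D trajectory to n points evenly spaced along its path length."""
    seg = np.linalg.norm(np.diff(points, axis=0), axis=1)
    points = points[np.concatenate([[True], seg > 0])]  # drop repeated samples
    if len(points) < 2:
        return np.repeat(points[:1], n, axis=0)
    steps = np.linalg.norm(np.diff(points, axis=0), axis=1)
    cum = np.concatenate([[0.0], np.cumsum(steps)])  # arc length at each sample
    targets = np.linspace(0.0, cum[-1], n)
    return np.stack([np.interp(targets, cum, points[:, 0]),
                     np.interp(targets, cum, points[:, 1])], axis=1)


def normalize(points: np.ndarray) -> np.ndarray:
    """Center on the centroid and scale so the largest coordinate magnitude is 1."""
    centered = points - points.mean(axis=0)
    scale = np.abs(centered).max()
    return centered / scale if scale > 0 else centered


def classify(trajectory, templates: dict) -> str:
    """Return the label of the template closest to the observed trajectory."""
    probe = normalize(resample(np.asarray(trajectory, dtype=float)))
    best_label, best_dist = None, float("inf")
    for label, template in templates.items():
        ref = normalize(resample(np.asarray(template, dtype=float)))
        dist = np.linalg.norm(probe - ref, axis=1).mean()  # mean point-to-point distance
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


# Hypothetical gesture templates, each a list of (x, y) hand positions.
angles = np.linspace(0.0, 2 * np.pi, 16)
TEMPLATES = {
    "swipe_right": [(0.0, 0.0), (1.0, 0.0)],
    "swipe_up": [(0.0, 0.0), (0.0, 1.0)],
    "circle": list(zip(np.cos(angles), np.sin(angles))),
}

observed = [(0.0, 0.02), (0.5, -0.01), (1.1, 0.03)]  # a slightly noisy rightward swipe
print(classify(observed, TEMPLATES))  # swipe_right
```

Resampling by path length is what lets a fast and a slow version of the same swipe compare fairly, since both end up with the same number of evenly spaced points.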

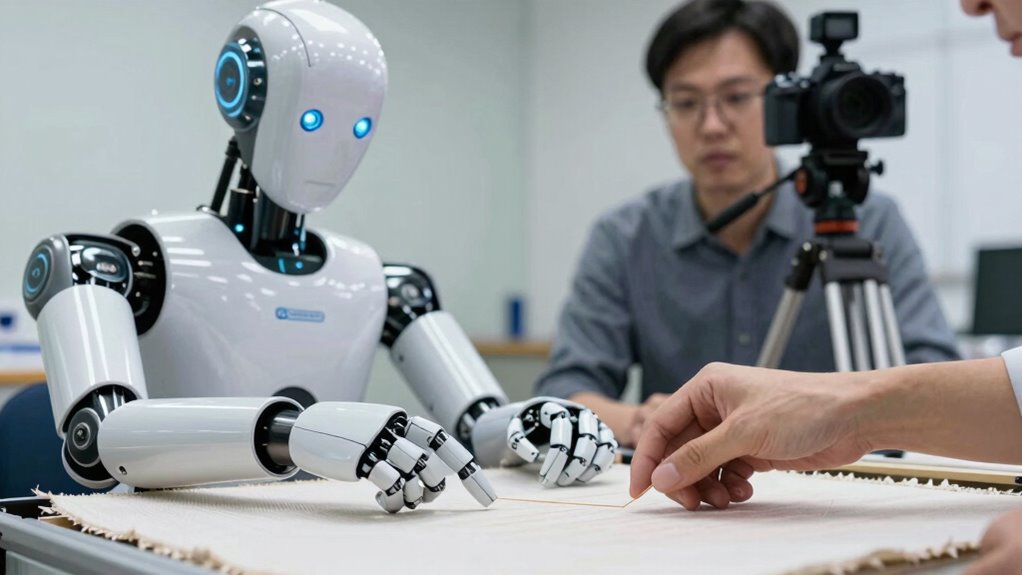

Imitation Learning Techniques

Imitation learning techniques let robots acquire new skills efficiently by mimicking human demonstrations. Instead of being programmed step by step, a robot learns a task from examples, which makes it easier to adapt to different environments. Several learning paradigms are applied here: supervised learning treats recorded demonstrations as labeled examples (an approach often called behavioral cloning), while reinforcement learning lets a robot refine a behavior through trial and feedback. Integrating ethical considerations into robot training helps ensure robots learn safely and responsibly, respecting human boundaries and societal norms. Focusing on imitation learning reduces programming complexity and lets robots pick up nuanced behaviors more naturally. This approach accelerates skill acquisition and paves the way for more intuitive human-robot interaction, while keeping development aligned with physical safety standards.
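Behavioral cloning, the supervised form of imitation learning, can be sketched in a few lines: record (state, action) pairs from a demonstrator, then fit a function that maps states to actions. The example below assumes a toy demonstrator that moves a hand halfway toward a goal at each step and fits a linear policy with ridge-regularized least squares. The demonstrator, the linear policy, and the names `fit_policy` and `act` are all illustrative assumptions; practical systems typically learn from camera images with neural networks.

```python
import numpy as np


def fit_policy(states: np.ndarray, actions: np.ndarray, ridge: float = 1e-3) -> np.ndarray:
    """Fit a linear policy action = [state, 1] @ W by ridge-regularized least squares."""
    X = np.hstack([states, np.ones((len(states), 1))])  # append a bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ actions)


def act(W: np.ndarray, state: np.ndarray) -> np.ndarray:
    """Query the learned policy for a single state."""
    return np.append(state, 1.0) @ W


# Hypothetical demonstrations: a person moves their hand halfway toward a goal
# at (1, 1) on each step, and we record (state, action) pairs with a little noise.
rng = np.random.default_rng(0)
goal = np.array([1.0, 1.0])
states = rng.uniform(-1.0, 1.0, size=(200, 2))
actions = 0.5 * (goal - states) + rng.normal(0.0, 0.01, size=(200, 2))

W = fit_policy(states, actions)
print(act(W, np.zeros(2)))  # close to [0.5, 0.5]
```

The robot never sees the rule "move halfway to the goal"; it recovers it from examples. A known weakness of plain behavioral cloning is that small errors can compound once the robot drifts into states the demonstrator never visited, which is one reason it is often combined with further training.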

Real-World Application

Advances in technology have made it possible for robots to learn from human demonstrations in real-world settings, turning theoretical techniques into practical tools. These innovations improve human-robot interaction, making robots more adaptable and intuitive. By observing and mimicking human actions, robots can perform complex tasks efficiently. Learning algorithms also continue to evolve, improving robot capabilities and safety measures and supporting adaptive learning, so robots can better respond to unpredictable environments. However, integrating this technology raises questions about robot ethics, especially regarding safety and responsibility. Here is a quick comparison:

| Aspect | Consideration |
|---|---|
| Learning Method | Observation-based, real-time adaptation |
| Ethical Concern | Ensuring safety and accountability |
| Application | Manufacturing, healthcare, service robots |

This technology bridges the gap between humans and robots, fostering cooperation while emphasizing the importance of responsible development. As these systems become more autonomous, ongoing discussion about their ethical use is essential to ensure beneficial outcomes for society. Such advances also contribute to the growth of artificial intelligence, paving the way for more sophisticated and reliable robotic systems.

Why Teaching Robots by Watching Is Better Than Coding

Teaching robots by watching humans often proves more effective than traditional coding because it lets them grasp complex, real-world behaviors directly from observation. It reduces programming complexity, making it easier to develop adaptable, versatile robots. Instead of painstakingly coding every possible action, you let the robot learn from examples, which aligns with robot ethics by reducing the risk of unintended consequences that rigid instructions can cause in situations their authors did not anticipate. Watching humans perform tasks helps robots pick up nuances and context that are difficult to encode explicitly, so you are less likely to oversimplify behaviors or miss critical details. This method streamlines learning, promotes flexibility, and supports responsible development as human-robot interaction evolves.

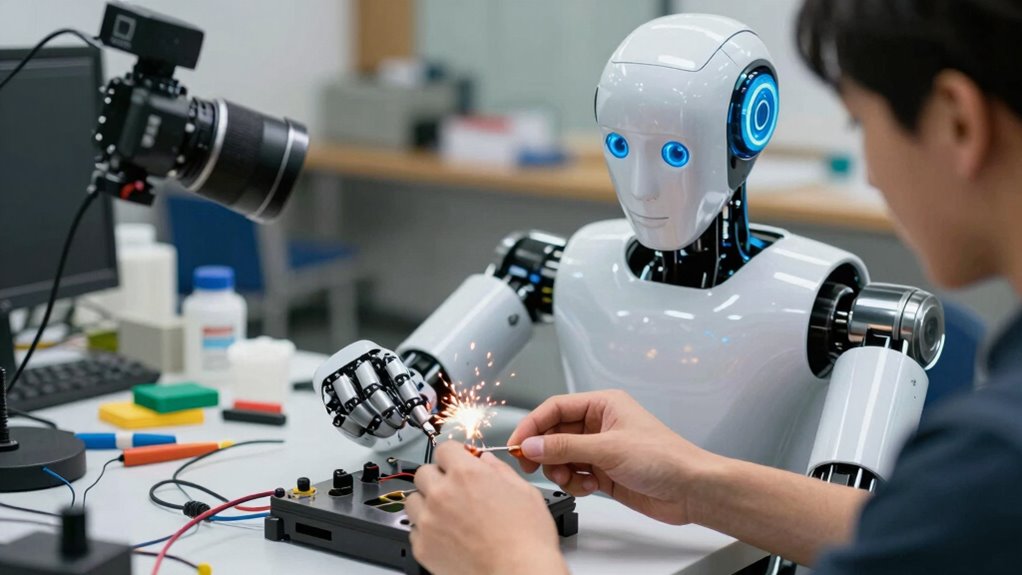

Examples of Robots Learning Tasks From People

Many robots are now learning tasks directly from people by observing their actions in real-world settings. For example, robots can watch humans cook, clean, or assemble objects and then imitate those tasks. Some use techniques like robot storytelling to better understand human intentions, and emotion recognition helps them interpret facial expressions and subtle cues so their responses feel more natural and effective. Some robots are trained to assist in healthcare by observing caregivers and patients and adapting their actions accordingly; others learn household chores from tutorials or live demonstrations. These examples show robots shifting from rigid programming to flexible, observational learning, which makes their integration into daily life smoother and more intuitive.

Several supporting technologies strengthen this approach. Auditory processing helps robots interpret human speech and sounds, and advances in sensor technology let them gather richer contextual information during observation, which can improve learning accuracy. Ongoing research into contextual understanding helps robots adapt to complex, dynamic environments, and integrating multimodal sensory data can give them a more comprehensive picture of human actions and their surroundings. Combined with machine learning algorithms, these techniques make robots more versatile and useful.

What Challenges Do Robots Face When Learning by Watching?

Learning by watching presents significant challenges for robots because interpreting human actions and intentions isn’t straightforward. Sensor limitations make it hard for robots to capture all details accurately, especially in dynamic environments. Data ambiguity adds to the difficulty, as the same action can have multiple meanings depending on context. For example:

| Challenge | Explanation |
|---|---|
| Sensor limitations | Incomplete or noisy data from cameras and sensors |
| Data ambiguity | Misinterpreting actions due to multiple possible meanings |
| Context understanding | Struggling to grasp the situational relevance |
| Temporal gaps | Missing parts of sequences that define actions |
| Variability | Different ways humans perform the same task |

These hurdles require advanced processing and contextual awareness for effective learning. Moreover, improving sensor technology can help mitigate some of these issues by providing clearer data. Additionally, developing effective training techniques remains crucial to overcoming these obstacles.
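Variability and temporal gaps are often tackled by aligning demonstrations in time before comparing them, because two people (or the same person twice) rarely perform a motion at the same speed. Dynamic time warping (DTW) is one standard technique for this, named here as an illustration rather than as the method any specific robot uses. The sketch below is a plain O(n·m) implementation; the hand-height traces are made-up data.

```python
import numpy as np


def dtw_distance(a, b) -> float:
    """Dynamic time warping distance between two sequences of equal-dimension samples."""
    a = np.asarray(a, dtype=float).reshape(len(a), -1)  # shape (n, d)
    b = np.asarray(b, dtype=float).reshape(len(b), -1)  # shape (m, d)
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])
            # Reach (i, j) via the cheapest of: from above, from the left, or diagonally.
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])


# Hypothetical hand-height traces from two demonstrations of the same motion,
# one performed more slowly (it lingers at the start and the end).
slow = [0.0, 0.0, 0.1, 0.2, 0.3, 0.3]
fast = [0.0, 0.1, 0.2, 0.3]
reversed_motion = [0.3, 0.2, 0.1, 0.0]

print(dtw_distance(slow, fast))             # 0.0: same shape, different timing
print(dtw_distance(fast, reversed_motion))  # > 0: a genuinely different motion
```

A point-by-point comparison could not even be computed here, since the traces have different lengths; DTW instead stretches the time axis so that matching phases of the motion line up.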

The Future of Robots That Learn Just by Watching

As technology advances, robots that learn by watching are poised to become more intuitive and adaptable, changing how they fit into daily life. Future robots are expected to understand human behavior better, making interactions smoother and more natural. Ethical considerations will play a significant role in their development, ensuring privacy and consent are prioritized. Improvements in user interface design should also make it easier for you to teach and communicate with robots. You can expect:

- Smarter, more personalized robot responses

- Enhanced transparency about data usage

- Safer, ethically guided learning processes

These advancements will make robots more trustworthy partners, capable of learning from watching while respecting your privacy and preferences. The future holds exciting possibilities for human-robot collaboration.

Frequently Asked Questions

Can Robots Learn Complex Skills Solely Through Observation?

Increasingly, yes. Advances in machine learning and robotic cognition let robots acquire many complex skills largely through observation. When a robot watches humans, it uses algorithms to analyze actions, recognize patterns, and adapt its behavior accordingly, developing sophisticated skills without explicit programming. In practice, many systems still combine observation with additional practice or fine-tuning, but ongoing research is making robots steadily more capable of mastering complex tasks from demonstrations.

How Do Robots Interpret Human Gestures Accurately?

Robots interpret gestures by combining visual processing with pattern recognition. When you train a robot, you enable it to analyze visual cues with algorithms that pick up subtle movements and match them to known gestures. The more precise the visual processing and pattern recognition, the more quickly and reliably the robot can interpret gestures, which makes interactions more intuitive and strengthens human-robot collaboration and communication.

Are There Ethical Concerns With Robots Learning From Humans?

Yes, there are ethical concerns with robots learning from humans. You should consider privacy concerns, as robots might collect personal data without clear consent. Consent issues arise if people aren’t fully aware their actions are being used to train robots. It is crucial to establish transparent guidelines and ensure individuals agree to how their behavior is observed and used, protecting their rights and fostering trust in these technologies.

What Industries Could Benefit Most From This Learning Approach?

Imagine a new apprentice watching a master chef: they see how the chef slices, seasons, and serves. Similarly, industries like manufacturing automation and healthcare robotics could thrive as robots learn precise tasks by observing humans. This approach can accelerate skill transfer, reduce errors, and adapt quickly to new procedures, making these sectors more efficient. Your role becomes guiding these robots, turning observation into mastery for improved productivity and care.

How Long Does It Typically Take for a Robot to Learn New Tasks?

Training time varies with task complexity and data quality: it can take anywhere from a few hours to several days. Because the robot learns by observing human actions rather than waiting for every behavior to be coded by hand, this approach is often faster than traditional programming methods.

Conclusion

Now that you see how simple yet powerful it is for robots to learn by watching humans, you can look ahead to a bright, bold future. By blending basic behaviors with breakthrough technology, robots become increasingly reliable. Watching, learning, and mimicking make machines more manageable and meaningful. So stay hopeful as this fascinating fusion fosters friendly, flexible robots that learn with less effort, opening exciting possibilities. Embrace the evolution, and look forward to a world where robots watch, learn, and work wonderfully with you.